Introduction

I’d be committing treason by not showing this xkcd comic in a sysadmin-related article:

With that taken care of, what is this post about exactly? Well, recently I had to wear the sysadmin hat. Here is what Wikipedia has to say about sysadmins:

A system administrator, or sysadmin, is a person who is responsible for the upkeep, configuration, and reliable operation of computer systems; especially multi-user computers, such as servers.

Close enough.

The reason I had to do sysadmin stuff is that I needed to deploy the Elixir game server for the remote team to use. They breezed through the simple tasks and features, and are ready to integrate the networking stuff.

Traditionally, I had a lot of issues with server deployment. Issues were related to DBs, web server setup, logging, and server monitoring. Thankfully, this time around, all these issues were taken care of! Let’s see how…

Database

I decided to use Postgres as a DB, mostly because it’s the recommended DB for Phoenix. The only issue is, it’s not the most usable DB on the planet. You can run into a lot of quirks and configuration issues while setting it up yourself, so I decided to just avoid that from the get-go.

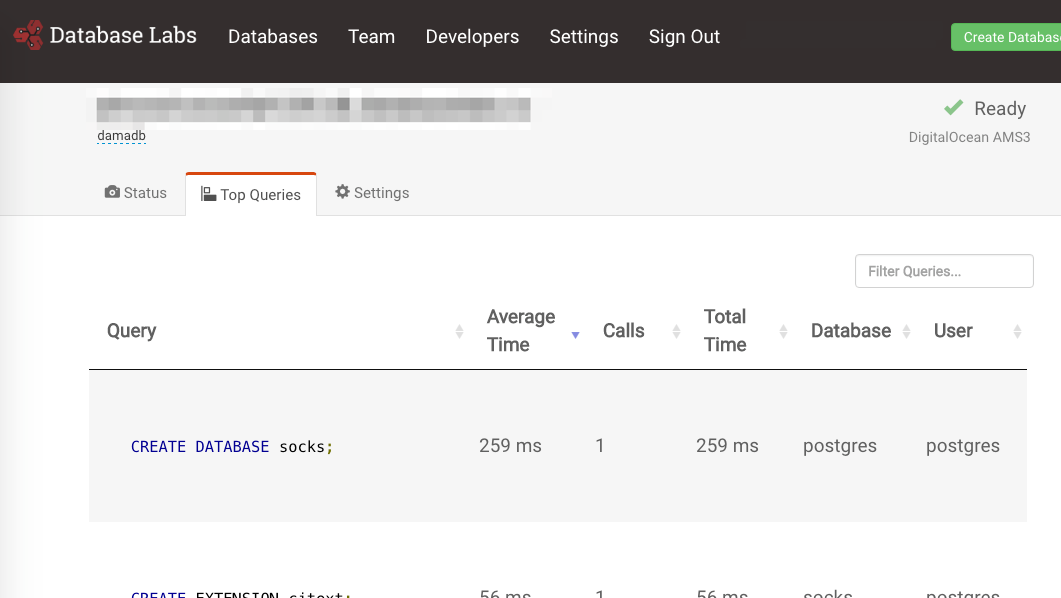

A quick Google search for recommended database services near my servers (hosted on DigitalOcean) showed me DatabaseLabs. The reason I felt comfortable going with them is that they only provide the thing that I needed, and nothing else. Postgres hosting on DigitalOcean. Period.

I had a lot of minor issues with the service in the beginning. The dashboard was kinda crappy, DataGrip didn’t work, and I wasn’t able to navigate and make use of simple resources due to bad UX.

Thankfully, they had a kickass support team. I was able to communicate with them and voice my concerns, which they acknowledged and addressed fairly quickly. So, it’s definitely a :thumbs_up: from me!
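For what it’s worth, pointing a Phoenix app at a hosted Postgres instance like this mostly comes down to the Ecto repo config. Here is a minimal sketch, not my exact setup: the app name `:game_server`, the module `GameServer.Repo`, the `DATABASE_URL` environment variable, and the `ssl: true` setting are all assumptions for illustration.

```elixir
# config/runtime.exs
# Sketch only: :game_server and GameServer.Repo are hypothetical names.
import Config

config :game_server, GameServer.Repo,
  # Connection string from the hosting provider, in the form
  # "ecto://USER:PASS@HOST:5432/DATABASE", read from an assumed env var.
  url: System.fetch_env!("DATABASE_URL"),
  # Assumption: the provider accepts (or requires) TLS connections.
  ssl: true,
  # Number of pooled connections; tune to what the hosted plan allows.
  pool_size: 10
```

Keeping the connection string in an environment variable means the credentials never land in the repository, and switching providers later is a one-line change on the server.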

Web Server

My previous server was using Apache, and it was a hellish experience to say the least. Too much crap to configure, too much intricacy involved in the way you structure your directories! There is no IDE or magic wand you can just wave here, so things didn’t go well.

Last time, I decided to use a Flask web service, and it is almost unthinkable to use the default web server shipping with Flask, hence I resorted to Apache.

This time around, I am using Elixir! Built on Erlang! Which has the superb Cowboy webserver! Yup, I decided not to bother with either nginx or Apache this time around, and just expose the Cowboy server to the outside world. It’s great, and I’m not complaining for now.

So, the way to solve the web server issue is to eliminate the need to have a web server in the first place! OK, I understand that at some point I may need to install a web server in order to do proxying and load balancing, but for now .. Wheeee!
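Exposing Cowboy directly is mostly a matter of endpoint config. Below is a rough sketch under a few assumptions: the names `:game_server` and `GameServerWeb.Endpoint`, the `PORT` environment variable, and the `example.com` host are placeholders, not what I actually run.

```elixir
# config/runtime.exs
# Sketch only: app, endpoint, and host names are hypothetical.
import Config

# Assumed env var; falls back to Phoenix's usual development port.
port = String.to_integer(System.get_env("PORT") || "4000")

config :game_server, GameServerWeb.Endpoint,
  # Cowboy listens here directly, on all IPv4 interfaces,
  # with no nginx or Apache in front of it.
  http: [ip: {0, 0, 0, 0}, port: port],
  # Public host and port used when generating URLs (placeholder host).
  url: [host: "example.com", port: port],
  # Start the HTTP server on boot (needed when running a release).
  server: true
```

Note that binding to a privileged port such as 80 usually needs extra permissions, which is one of the reasons people end up putting a proxy in front eventually.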

Logging

Ah … Logging … Such an under-appreciated corner of the backend world. Up until now, I have worked with over 5 different companies with relatively sophisticated servers, and none of them had proper logging aggregation. When I would ask for logs to look at, they all pointed me to a bunch of zip files dumped on the server … Really?

The thing is, that’s what most servers come with by default. A file logger with log rotation support. This means the server writes the logs to a file, and once a duration or file size limit is reached, the log file is compressed, moved elsewhere, and a new file is created. This is cool, but how are we supposed to go through all these logs?
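To make the rotation behaviour concrete, here is roughly what such a setup looks like in Elixir. This is a sketch that assumes Elixir 1.15 or later, where Logger sits on top of Erlang’s `:logger` and its standard file handler; the file path and limits are made-up values.

```elixir
# config/config.exs
# Sketch only: assumes Elixir 1.15+; path and limits are illustrative.
import Config

config :logger, :default_handler,
  config: [
    # Write logs to a file instead of standard output.
    file: ~c"log/server.log",
    # Rotate once the current file reaches roughly 10 MB.
    max_no_bytes: 10_000_000,
    # Keep at most 5 rotated archives; older ones are deleted.
    max_no_files: 5,
    # Compress each archive when it is rotated out.
    compress_on_rotate: true
  ]
```

This is exactly the kind of setup that leaves you with a pile of compressed archives on the server and nothing to search them with.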

Enter the logging aggregator.

With a logging aggregator, you can send all these logs to a centralized location, and this service would basically provide you with powerful search and lookup tools to properly look through all these logs.

The service I came across and found extremely useful was Papertrail. It had everything I could ask for:

- Regex search and filtration

- Generous free trial

- Elixir logger library

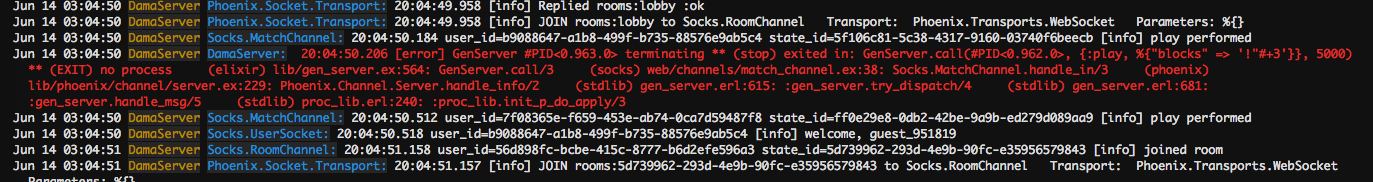

With Papertrail, I can finally run my Phoenix server in detached mode without worrying about losing any logs, since they are all sent to Papertrail where I can see them update live!

A few honorable mentions include LogDNA and Logentries. They look super promising as well, but I am simply too satisfied with Papertrail to bother with exploring alternatives.

Monitoring

We reach our final point, monitoring. During the EC2 days, I had set up New Relic, which was a terrible experience. After adding their library into my server code, it didn’t really perform well, and I could’ve sworn my server performance was degraded. Either way, I really hated the fact that I had to change my server code just to get simple monitoring.

AWS’s so-called “CloudWatch” is the absolute worst. I’ve also tried several solutions for monitoring Ubuntu instances, and they were all annoying to deal with … Monitoring was another thing I didn’t get right in the past.

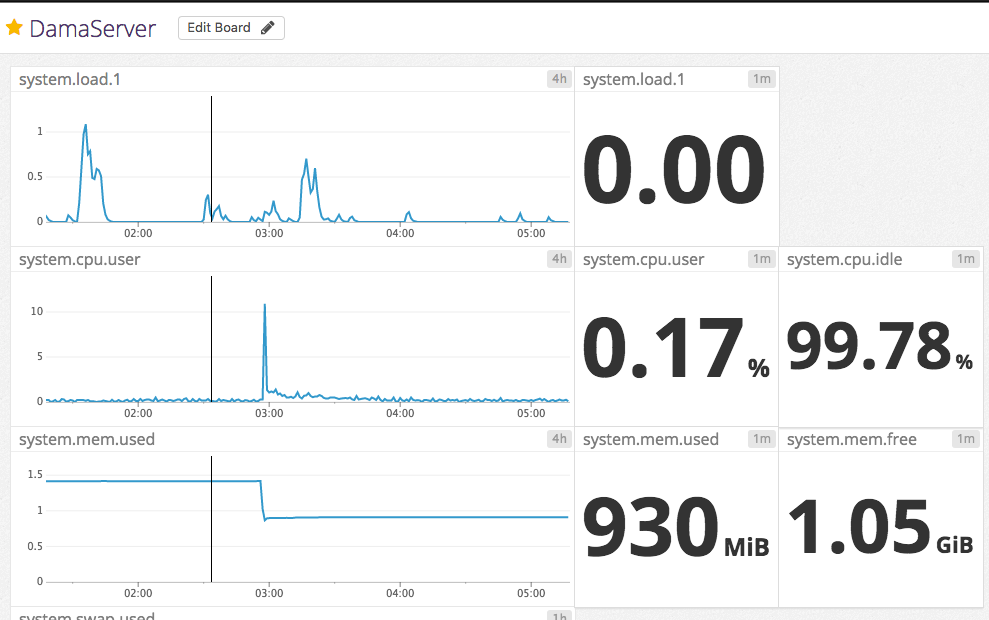

This time around, again, there it was. The best monitoring service I could ask for, Datadog. Just by deploying a small script, I now have a daemon up and running on my server, sending all the relevant stats to the Datadog server, for me to view conveniently from their dashboard.

Datadog’s great features include a fully customizable dashboard where you choose the stats you wanna monitor, a generous free tier (again), a slick UI, integrations, and many other neat things. I am really happy with it.

Conclusion

I didn’t need to use Docker this time around, nor am I bothering with fancy continuous integration or deployment… It’s time to get just the bare minimum done and ship. I’ve actually been preaching this a lot to people around me who keep making the same grave mistake over and over again …

“We must perfect this!”, “It’s all about the technology!”, “We want the best code EVAR!!” … No.

The whole idea of startups that deliver consumer products is to MITIGATE the risk of technologies and focus on delivering the best user experience possible. Stop getting bogged down in the details of the technology stack, and focus on delivery, shipment and customer satisfaction. Customer satisfaction mostly comes from endless iterations on the product-facing side, not the tech side.

HEED MY WORDS!